Lixiao Huang, Ph.D. | Human Factors and Applied Cognition


I am an Associate Research Scientist at the Center for Human, Artificial Intelligence, and Robot Teaming (CHART) under the Global Security Initiative at Arizona State University. I completed my Ph.D. in Human Factors and Applied Cognition at North Carolina State University in 2016 and a postdoc in the Humans and Autonomy Lab (HAL) at Duke University in 2018. I am the founding chair of the Human–AI–Robot Teaming (HART) technical group at the Human Factors and Ergonomics Society, advocating cutting-edge HART research, interdisciplinary collaboration, and advanced testbeds and analytics. I have worked on ARL, ONR, and DARPA research projects as a research lead. My research interests include (a) Human–AI–Robot Teaming effectiveness; (b) modeling humans’ responses (i.e., emotional states, behavioral patterns, and cognitive processes) to robots and technologies, especially emotional attachment, intrinsic motivation, coordination, trust, and metacognition; and (c) the design of human–robot systems using Human Factors methods to make AI and robots effective, safe, user-friendly, trustworthy, and engaging.

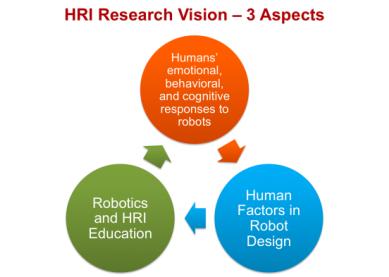

RESEARCH VISION

I study Human-Robot Interactions (HRIs) to improve quality of life and performance through the ergonomic design of robots and technologies. My research vision consists of three aspects: (a) the exploration of humans' emotional, behavioral, and cognitive responses to robots, especially emotional attachment, intrinsic motivation, and trust in autonomy, (b) the application of Human Factors (HF) principles to human–robot interface design, and (c) the effective education of robotics and HRI.

How do humans respond to robots and technologies behaviorally, emotionally, and cognitively?

Many research questions in HRI are still new, and exploring humans’ responses to existing robots is an effective way to approach them. Behavioral responses refer to gestures and movement patterns. Emotional responses refer to the valence and arousal of feelings toward a robot. Cognitive responses refer to processes like attention, memory, and metacognition. Take humans’ emotional attachment to robots, for example. A robotics education instructor observed that students became very sad when they were asked to dismantle their robots at the end of the semester. She asked me whether that was emotional attachment. My research confirmed students’ positive emotions toward their robots, but their negative emotions were not as strong as would be expected from typical emotional attachment between humans. After further examining the definitions of attachment and its influencing factors, I proposed a generalized emotional attachment model that can explain various emotional interactions between humans and other humans, humans and animals, and humans and nonliving entities. The findings suggested principles for designing robots that increase humans’ intrinsic motivation and positive emotions in HRI: design for emotional attachment. Exploring humans’ behavioral, emotional, and cognitive responses paves the way for further robot design and HRI experiments.

How can we use human factors to improve the design of human–robot interfaces?

The design of human–robot interfaces is critical in health care, education, transportation, and the military. The design process requires HF methods in different phases. My doctoral training in HF equips me with a systematic methodology for interface development and usability testing. My postdoctoral work at Duke focuses on humans’ interactions with autonomous systems, such as unmanned aerial vehicles (UAVs) and unmanned underwater vehicles (UUVs).
I study the impact of design features on humans’ trust in autonomy, as well as the impact of increasing automation on humans’ performance and training requirements. I have the privilege of using two software platforms from Duke in my future research: Research Environment for Supervisory Control of Heterogeneous Unmanned Vehicles (RESCHU) and Human-Autonomy Interface for Exploration of Risks (HAIER).

How can we use robots in education more effectively?

Current K-12 and college students will become the major users and designers in the future robotics industry, so we should start enhancing their awareness, knowledge, and skills in robotics now. Robotics clubs, educational robot toys, service robots, and robotics classes in higher education are popular venues for studying the effectiveness of using robots in learning robotics, HRI, and other subjects. My psychology background and graduate research assistant experience in the Department of Education equip me to conduct this line of research well. So far I have studied problem-solving skills, metacognition, engagement, and motivation. I am also interested in students’ learning processes and in robot design features that could motivate students to interact with robots and to learn effectively through those interactions. While volunteering as an evaluator of robot design and robot performance at middle school and high school robotics tournaments, I observed that students employed HF design principles in their robot building without being aware of the underlying theory. I am interested in studying and promoting the application of HF design principles in robotics tournaments to help middle school and high school students improve their robot designs and achieve high performance.

RESEARCH METHODS

I use multimodal analyses to explore one research question from different perspectives.
Below is a list of research methods that I have used: experiments, interviews, surveys, naturalistic observations, verbal data analysis (essays, interviews, chat text, and blog posts), video image analysis (facial expressions, gestures, and behavioral patterns), and usability testing (task analysis, heuristic analysis, prototyping, error analysis, and mental models).